Grok a Crock

A.I. cannot be trusted for accurate information, ever.

While researching No Agenda Show 1701 (a coincidental Star Trek reference, since 1701 is the Enterprise's registry number), I wanted to know whether Captain Kirk was ever a Commodore. I hoped to tie this in with a special show promotion in which the show was conferring Commodore titles on generous producers.

I have used the Twitter AI dubbed “Grok” a few times to find out a fact or two without slogging through search engine results. I thought it was a good idea at the time.

Now I am not so sure.

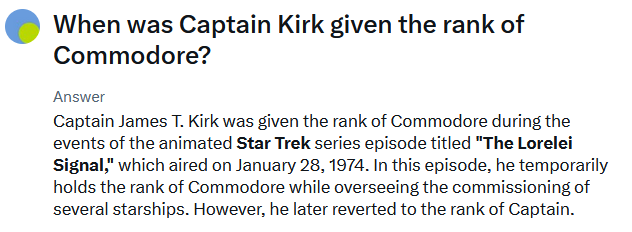

First, regarding Captain Kirk, I queried the product with various forms of the question and discovered that no two answers were alike or even similar. Here are the results:


That's a lot of discrepant answers. Do I now have to go back and watch each of these episodes to figure out whether these answers are correct?

I recall that Kirk may have been a Commodore before he raced off on the U.S.S. Enterprise in the first Star Trek movie. As for the rest, I am not so sure, and from the looks of it, neither is Grok.

I then continued the experiment by asking Grok the exact same question over and over. I assumed that if I asked question A and got answer B, asking A again would return B again. That assumption was folly. AI, it seems, does not like to be consistent. In fact, the variations are ludicrous. Look at these results.

So despite the promises of AI, what we are beginning to see is rank nonsense and misinformation, if not outright fabrication. Worse, the AI never says anything such as “I might be wrong” or “This is the best I can do.” It is always cocksure.

These systems should include a disclaimer telling people that the answers are not reliable and that they should do more research.

It's unfortunate that people are being sold on the idea that these answers are correct when they are not. High school kids using this stuff should beware.

Let's end this AI mania sooner rather than later. – JCD

Listen to the No Agenda Podcast twice weekly.